Shared motion is an all-purpose timing mechanism for multi-device applications. It is a simple concept with a simple API. Simplicity is generally a virtue, but it can also puzzle programmers who are new to the concept. For this reason, we recommend taking the time to form a basic understanding of the concept before developing any applications. This overview should be a good starting point.

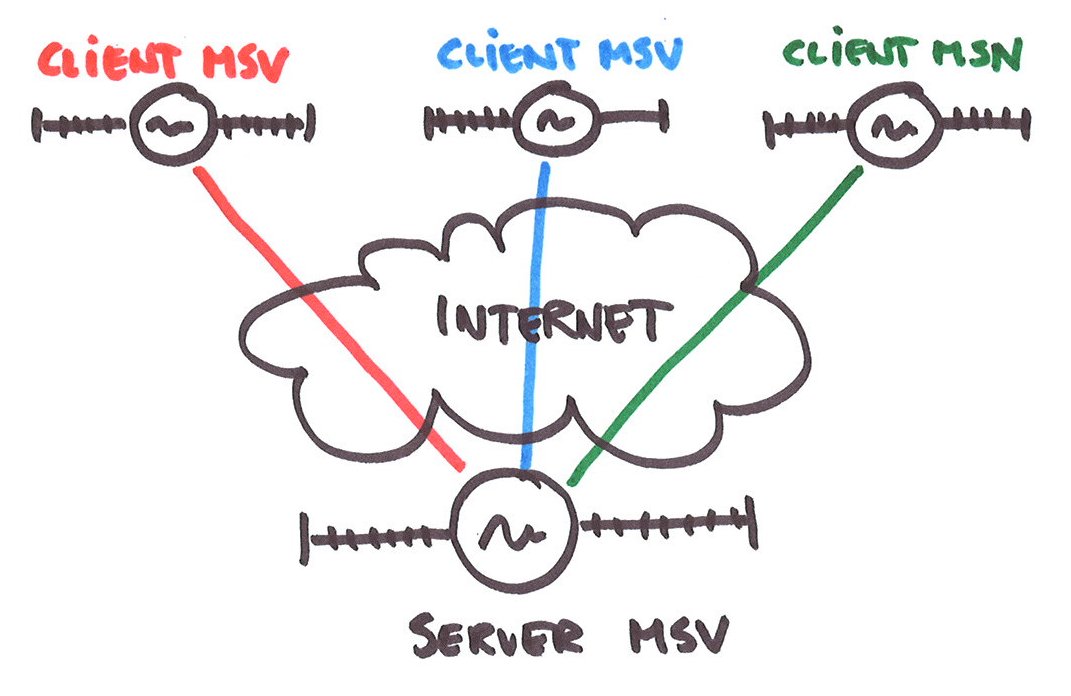

Multi-device applications depend on online resources. Such online resources usually include files, databases, or more advanced data structures and/or services. In this respect, shared motion is just an additional type of online resource that you might want to employ.

Designing applications with shared motions is easy. As with online resources in general, you need to figure out how many motions you need, what purpose they will serve, how they will be used, and what access privileges will be granted to different users. Once created, shared motions are always available. Shared motions can be created manually during application setup, or dynamically during application execution. Shared motions are never deleted.

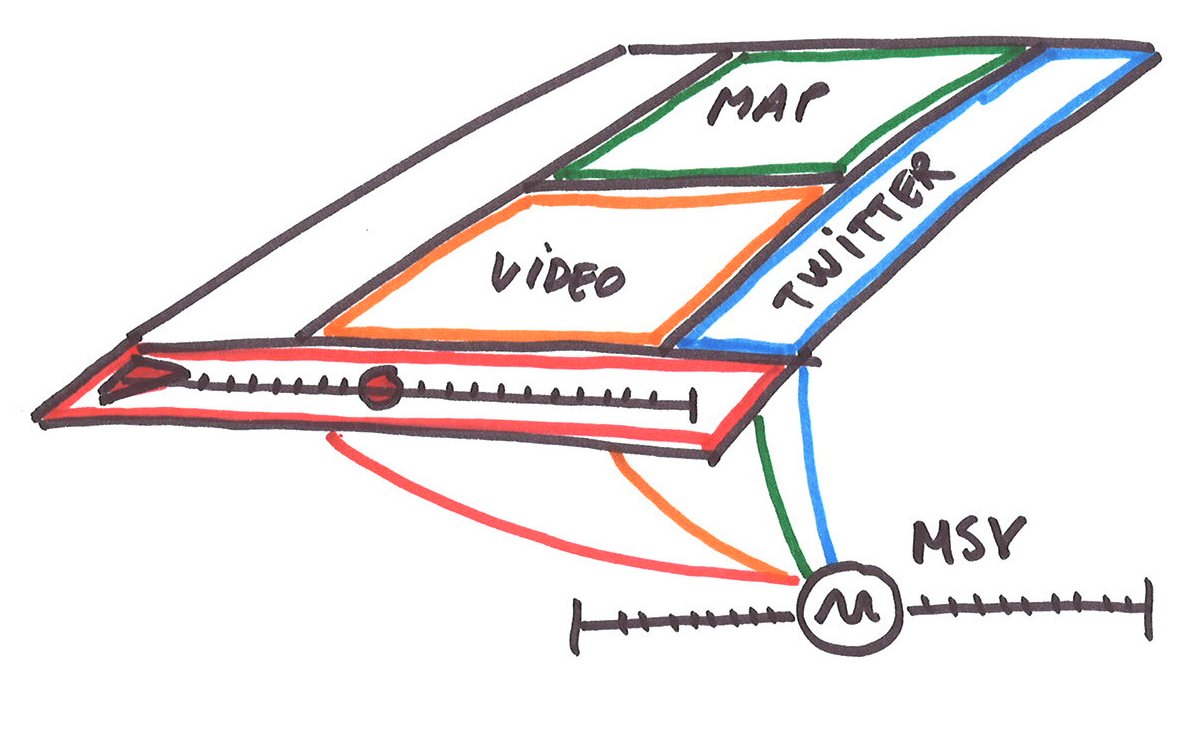

In applications, shared motions may represent variables that change predictably in time. For example, when a video is played, the video offset changes in time. By creating a shared motion for this variable, the video offset may be shared and tightly synchronized (across the Internet) between the devices of your application. Thus, a single shared motion may be the basis for collaborative viewing as well as for synchronization of time-sensitive "secondary device" content. In general, shared motion is suitable for shared control and navigation of any kind of linear media, including discrete slide-shows and maps with multi-dimensional controls.
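What "changes predictably in time" means can be made concrete with a small model. The sketch below assumes a vector of position, velocity and acceleration, stamped with the clock time at which it was set, and extrapolates it with ordinary constant-acceleration kinematics. The names `MotionVector` and `computeVector` are hypothetical and not taken from any particular API.

```typescript
// Hypothetical sketch of a deterministic motion model. The vector layout
// [position, velocity, acceleration, timestamp] is an assumption for illustration.

interface MotionVector {
  position: number;     // e.g. video offset in seconds
  velocity: number;     // position units per second (1 = normal playback)
  acceleration: number; // position units per second squared
  timestamp: number;    // clock time (seconds) at which the vector was set
}

// Extrapolates a vector to clock time t with constant-acceleration kinematics.
// Clients that share the vector and a synchronized clock compute the same answer.
function computeVector(v: MotionVector, t: number): MotionVector {
  const d = t - v.timestamp;
  return {
    position: v.position + v.velocity * d + 0.5 * v.acceleration * d * d,
    velocity: v.velocity + v.acceleration * d,
    acceleration: v.acceleration,
    timestamp: t,
  };
}

// Usage: video offset 10 s, playing at normal speed, set at clock time 100 s.
const playing: MotionVector = { position: 10, velocity: 1, acceleration: 0, timestamp: 100 };
const now = computeVector(playing, 104.5);
console.log(now.position, now.velocity); // 14.5 1
```

Because the vector only changes when someone updates it, continuous playback needs no network traffic between updates.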

Shared motion is fully independent of media content. This confuses some people, as they are not used to thinking about media synchronization without media. However, the idea is pretty simple. We synchronize shared motion across the Internet, which allows content to be synchronized with the motion locally, at the client side. Content independence allows shared motion to be tightly synchronized, globally available, and applicable to all kinds of content types and transport protocols. Additionally, it means that existing content services may be used without modification, and that control of the content remains with the content providers.

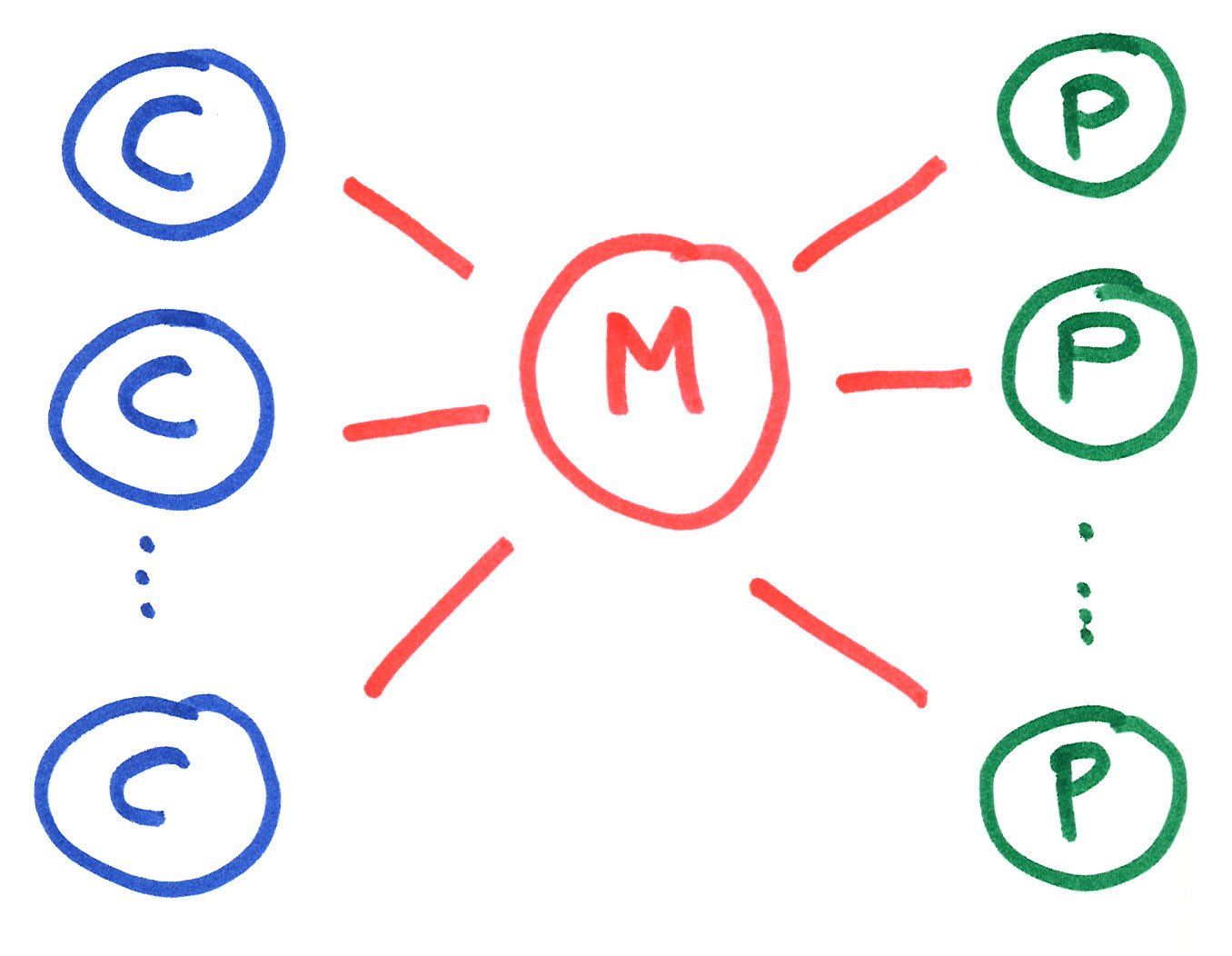

The illustration shows how clients across the Internet may connect to our motion service, mirror online motions accurately, and request changes to motions. You may think of a shared motion as being simultaneous on all clients across the Internet. Our implementation of motion synchronization approximates this quite well, usually with errors below 20 ms. This is achieved by compensating for clock skew and for variation in network delay. Clients can join and leave at any time without affecting the motion or other clients.
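Compensating for clock skew is commonly done by timestamping request/response round trips, in the style of NTP. The following is a minimal sketch of that general technique, not a description of how our service does it. `ClockSample` and `estimateOffset` are hypothetical names, and the sketch assumes the outbound and return trips take equally long.

```typescript
// Hypothetical sketch: NTP-style estimate of the offset between a local clock
// and a server clock. Assumes symmetric network delay; not the service's code.

interface ClockSample {
  sent: number;     // local time when the request was sent (seconds)
  server: number;   // server time stamped in the reply (seconds)
  received: number; // local time when the reply arrived (seconds)
}

interface OffsetEstimate {
  offset: number; // serverTime ≈ localTime + offset
  rtt: number;    // round-trip time of the sample used
}

// Uses the sample with the shortest round trip, since its error
// (at most rtt / 2) is the smallest.
function estimateOffset(samples: ClockSample[]): OffsetEstimate {
  if (samples.length === 0) {
    throw new Error("need at least one sample");
  }
  let best = samples[0];
  for (const s of samples) {
    if (s.received - s.sent < best.received - best.sent) {
      best = s;
    }
  }
  return {
    offset: best.server - (best.sent + best.received) / 2,
    rtt: best.received - best.sent,
  };
}

// Usage: the server clock is 5 s ahead; the second sample had the least delay.
const est = estimateOffset([
  { sent: 0.0, server: 5.3, received: 0.4 },
  { sent: 1.0, server: 6.05, received: 1.1 },
  { sent: 2.0, server: 7.2, received: 2.6 },
]);
console.log(est.offset.toFixed(3), est.rtt.toFixed(3)); // 5.000 0.100
```

A client would then map a local time `t` to service time as `t + offset`. Keeping the lowest-delay sample is one simple way to reduce the impact of variation in network delay, since the error of the estimate is bounded by half the round-trip time of the chosen sample.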

The following is a basic rule for sound usage.

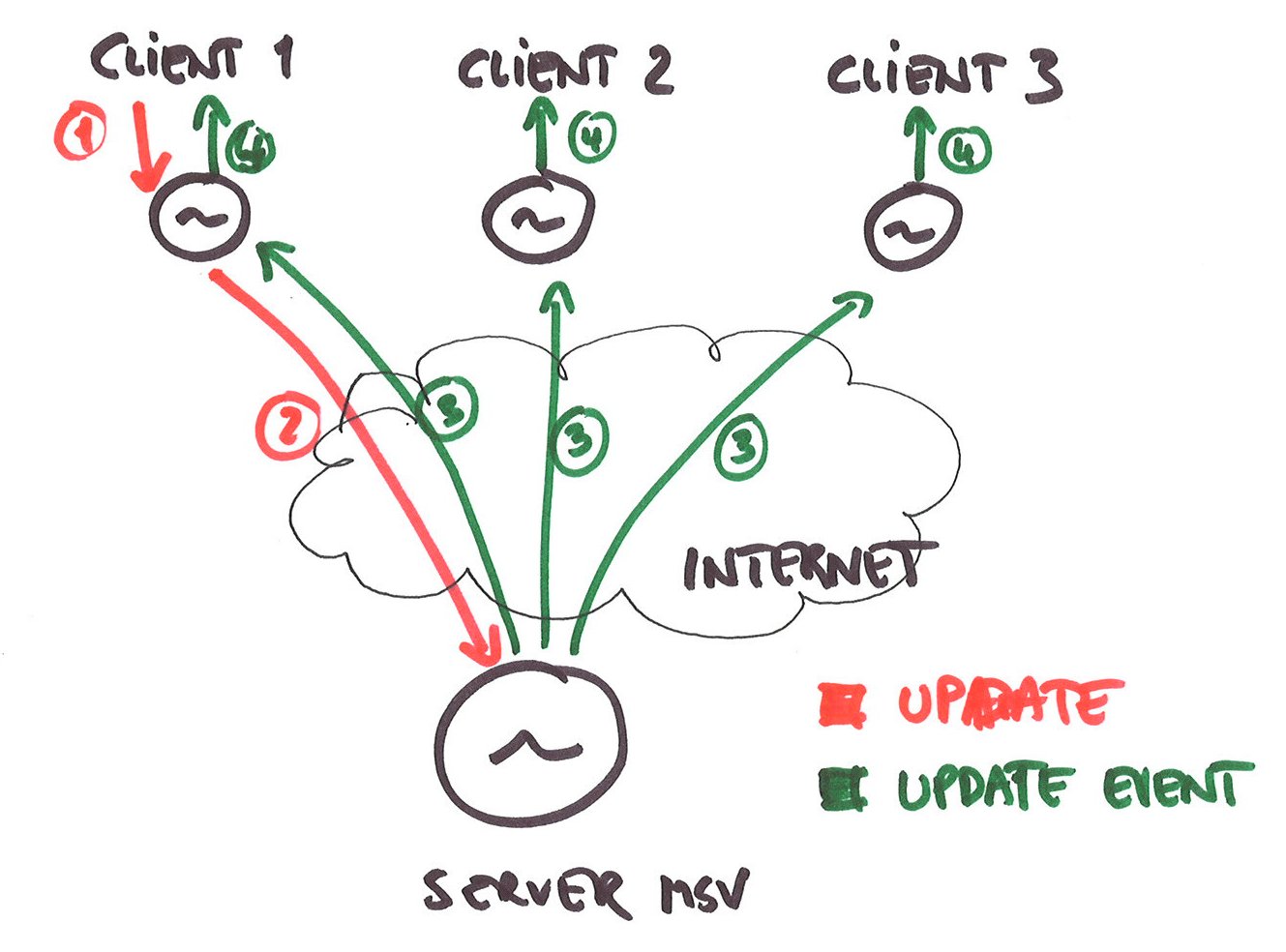

Querying the state of a shared motion is a no-cost, local operation. It is designed to be used frequently by UI components adapting to continuous motion. Note, however, that excessive querying is not necessary to detect MSV updates quickly. Use the update callback for this instead.
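The difference between querying and being notified can be illustrated with a client-side mirror of a motion. In this sketch, which assumes a position/velocity/acceleration vector and an injectable clock, `query()` is plain arithmetic on the locally held vector, while changes are pushed to registered callbacks. `MotionMirror`, `onChange` and `applyRemoteUpdate` are hypothetical names, and the network side is only simulated.

```typescript
// Hypothetical sketch of a client-side motion mirror. query() is local
// arithmetic; updates from the service (simulated here) go to listeners.

interface Vector {
  position: number;
  velocity: number;
  acceleration: number;
  timestamp: number; // seconds on the (skew-compensated) clock
}

type Listener = (v: Vector) => void;

class MotionMirror {
  private vector: Vector;
  private clock: () => number;
  private listeners: Listener[] = [];

  constructor(initial: Vector, clock: () => number) {
    this.vector = initial;
    this.clock = clock;
  }

  // Local and cheap: no network traffic, fine to call every animation frame.
  query(): Vector {
    const v = this.vector;
    const t = this.clock();
    const d = t - v.timestamp;
    return {
      position: v.position + v.velocity * d + 0.5 * v.acceleration * d * d,
      velocity: v.velocity + v.acceleration * d,
      acceleration: v.acceleration,
      timestamp: t,
    };
  }

  // Register for change notifications instead of polling for them.
  onChange(listener: Listener): void {
    this.listeners.push(listener);
  }

  // Called when an update notification arrives from the service.
  applyRemoteUpdate(v: Vector): void {
    this.vector = v;
    for (const listener of this.listeners) listener(v);
  }
}

// Usage with a fake clock.
let fakeNow = 0;
const mirror = new MotionMirror({ position: 0, velocity: 0, acceleration: 0, timestamp: 0 }, () => fakeNow);
const seen: number[] = [];
mirror.onChange((v) => seen.push(v.velocity));
mirror.applyRemoteUpdate({ position: 0, velocity: 1, acceleration: 0, timestamp: 0 });
fakeNow = 3;
console.log(mirror.query().position, seen); // 3 [ 1 ]
```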

Updates are forwarded across the network and cause notifications to be sent to all clients sharing the motion. This is a more expensive operation. Excessive updating goes against the basic motivation for the concept, which is to describe continuous change with a few updates rather than a stream of them. Our services protect against overly frequent updates.

Frequent updates may arise if programmers misunderstand the concept (see Motion is Boss below) and repeatedly update a shared motion with new positions from a playing video, or from a button held down. Use velocity instead: a single update that sets the velocity lets the motion advance on its own until the next change.
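The velocity approach can be sketched for a fast-forward button. Assuming a simple position/velocity vector (`CountingMotion` is a hypothetical stand-in that counts updates instead of sending them), pressing and releasing the button costs two updates in total, whereas pushing a new position every 50 ms while the button is held for 2 seconds would cost about 40.

```typescript
// Hypothetical sketch: a fast-forward button implemented with velocity changes.

interface Vector { position: number; velocity: number; timestamp: number; }

// Illustrative stand-in for a shared motion; counts the updates that
// would otherwise be sent over the network.
class CountingMotion {
  updates = 0;
  private vector: Vector;

  constructor(initial: Vector) {
    this.vector = initial;
  }

  positionAt(t: number): number {
    return this.vector.position + this.vector.velocity * (t - this.vector.timestamp);
  }

  // Change velocity at time t, keeping the position continuous.
  setVelocity(t: number, velocity: number): void {
    this.updates++;
    this.vector = { position: this.positionAt(t), velocity, timestamp: t };
  }
}

// One update on press, one on release; the motion moves by itself in between.
function attachFastForward(motion: CountingMotion) {
  return {
    press: (t: number) => motion.setVelocity(t, 4),
    release: (t: number) => motion.setVelocity(t, 1),
  };
}

const motion = new CountingMotion({ position: 0, velocity: 1, timestamp: 0 });
const ff = attachFastForward(motion);
ff.press(10);   // position 10, now moving at 4x
ff.release(12); // position 10 + 4 * 2 = 18, back to 1x
console.log(motion.positionAt(12), motion.updates); // 18 2
```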

Frequent updates may also arise if too many people can navigate a shared motion. This is as much a social issue as a technical one. Collaboration can be challenging once groups grow too large. One remedy may be for the application to allow switching between private and shared modes, so that people can navigate individually without affecting the rest of the group. Both social and technical protocols exist for electing a master and passing this responsibility around.

Trying to synchronize non-deterministic motions is another source of problems. We implement shared motion with Media State Vectors (MSVs). This technical concept is based on a deterministic model for motion, and both its accuracy and its scalability rely on this determinism.

For instance, it may be tempting to use shared motions to synchronize the motion of a mouse pointer between devices (e.g., X and Y coordinates could be bound to separate motions). However, since mouse pointers move rather unpredictably, this will likely result in a stream of updates. Step-wise approximation may be one way to synchronize non-deterministic motions. Still, in the case of the mouse pointer, it would probably be better to treat the stream of mouse coordinates as a content source and choose a better-suited transport mechanism.
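Step-wise approximation can be sketched as a form of dead reckoning: the sender keeps the last published vector and only publishes a new one when the motion's prediction drifts too far from the observed value. The function `stepwiseUpdates`, the linear model, the velocity estimate from the last two samples and the `threshold` parameter are all illustrative assumptions.

```typescript
// Hypothetical sketch of step-wise approximation ("dead reckoning").

interface Vector { position: number; velocity: number; timestamp: number; }
interface Sample { t: number; value: number; }

function predict(v: Vector, t: number): number {
  return v.position + v.velocity * (t - v.timestamp);
}

// Returns the vectors that would be sent as updates for a series of samples.
// A new vector is published only when the prediction misses by > threshold.
function stepwiseUpdates(samples: Sample[], threshold: number): Vector[] {
  const sent: Vector[] = [];
  let current: Vector | null = null;
  let prev: Sample | null = null;
  for (const s of samples) {
    if (current === null || Math.abs(predict(current, s.t) - s.value) > threshold) {
      // Estimate velocity from the last two samples (zero without history).
      const velocity = prev ? (s.value - prev.value) / (s.t - prev.t) : 0;
      current = { position: s.value, velocity, timestamp: s.t };
      sent.push(current);
    }
    prev = s;
  }
  return sent;
}

// Usage: a pointer moving steadily to the right, then jerking at t = 4.
const pointerX: Sample[] = [
  { t: 0, value: 0 },
  { t: 1, value: 2 },
  { t: 2, value: 4 },
  { t: 3, value: 6 },
  { t: 4, value: 15 },
];
console.log(stepwiseUpdates(pointerX, 0.5).length); // 3
```

Steady movement costs only a few updates, while erratic movement still produces an update for every sample that exceeds the threshold, which is why a content-style transport is probably the better fit for a mouse pointer.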

When using shared motion, keep in mind that motion is the boss. For example, if video offset is synchronized using shared motion, then the motion does not tell you what the offset of the video element is, but rather what it should be. This way, we can always compare reality (video element) with the ideal (motion) and take the necessary steps to adjust locally.

Notice also that this may have some unfamiliar consequences. For instance, when video elements experience bandwidth issues, they usually pause for buffering. This is not acceptable unless the shared motion itself is paused. Instead, the media presentation continues undisturbed, and the video element will have to settle for presenting fragments of the video content. This may seem like a poor user experience. However, in a distributed scenario it is often the sensible thing to do: the lousy connection of one client will not necessarily affect the rest of the clients.
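Comparing reality with the ideal can be sketched as a correction step that runs periodically and on every motion change. This is one common strategy, not necessarily the one used by our own libraries: small errors are corrected by nudging the playback rate, large ones by seeking. `VideoLike` mirrors the `currentTime` and `playbackRate` properties of an HTML video element, the thresholds are illustrative, and forward playback is assumed.

```typescript
// Hypothetical sketch of "motion is boss": the motion says where the video
// should be, and the client corrects the video element locally.

interface VideoLike {
  currentTime: number;  // seconds (reality)
  playbackRate: number;
}

type SyncAction = "seek" | "adjust" | "ok";

const SEEK_THRESHOLD = 1.0;  // seconds: beyond this, jump instead of easing
const TOLERANCE = 0.02;      // seconds: close enough, play at motion velocity
const MAX_RATE_ADJUST = 0.1; // at most ±10 % speed change while catching up

// Call periodically (e.g. every 200 ms) and whenever the motion changes.
function syncVideo(video: VideoLike, motionPosition: number, motionVelocity: number): SyncAction {
  const diff = motionPosition - video.currentTime; // > 0 means the video is behind
  const farOff = Math.abs(diff) > SEEK_THRESHOLD;
  const offWhilePaused = motionVelocity === 0 && Math.abs(diff) > TOLERANCE;
  if (farOff || offWhilePaused) {
    // Large error (or any error while paused): jump to the ideal position.
    video.currentTime = motionPosition;
    video.playbackRate = motionVelocity;
    return "seek";
  }
  if (Math.abs(diff) > TOLERANCE) {
    // Speed up when behind, slow down when ahead, proportional to the error.
    const adjust = Math.max(-MAX_RATE_ADJUST, Math.min(MAX_RATE_ADJUST, diff));
    video.playbackRate = motionVelocity * (1 + adjust);
    return "adjust";
  }
  video.playbackRate = motionVelocity;
  return "ok";
}

// Usage: the video fell 2.5 s behind while buffering; it skips ahead.
const video: VideoLike = { currentTime: 10, playbackRate: 1 };
console.log(syncVideo(video, 12.5, 1), video.currentTime); // seek 12.5
```

Note how the sketch never waits for the video: if buffering leaves the element more than a second behind, it simply seeks to where the motion says it should be, skipping the missed fragment. On a real video element, a velocity of 0 would typically be mapped to `pause()` rather than to a playback rate of 0.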

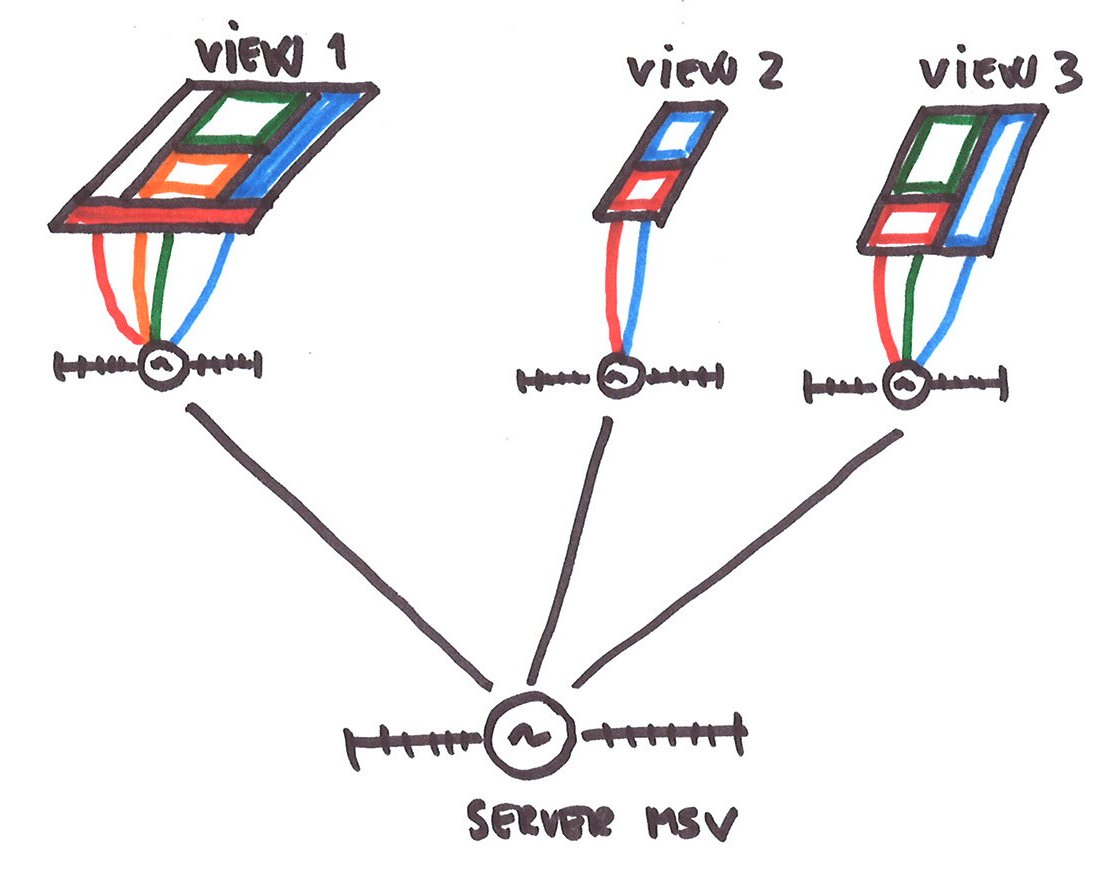

The illustration shows how shared motion can mediate between independent UI components within a single screen, or distributed across multiple devices. The UI components need not even know about each other. Instead, all UI components monitor the shared motion and adapt their visuals individually. This makes for a very flexible and loosely coupled design. Still, to the end user the UI components appear as tightly coupled parts of a single presentation.

Notice also how the play button is not owned by the video element. Instead, it is a standalone UI component controlling the entire presentation via the shared motion. This idea also extends to multi-device applications. For instance, Shared Motion allows the video controls on a mobile phone to control a video viewer on a big screen.

So, shared motion encourages a modular design, where UI components are continuously aligning their presentations to motion. Note also that such UI components can readily be used in both single-device and multi-device presentations.
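The decoupling of UI components can be sketched in a single process. `LocalMotion` is a hypothetical in-memory stand-in for a shared motion; the play button and the progress label only talk to the motion, never to each other. Under the assumption that a real shared motion offers equivalent query, update and change-notification operations, the same components could run on different devices.

```typescript
// Hypothetical sketch: independent UI components coupled only through a motion.

interface Vector { position: number; velocity: number; timestamp: number; }

// Illustrative in-memory stand-in for a shared motion.
class LocalMotion {
  private vector: Vector = { position: 0, velocity: 0, timestamp: 0 };
  private listeners: Array<(v: Vector) => void> = [];

  query(t: number): Vector {
    const v = this.vector;
    return { position: v.position + v.velocity * (t - v.timestamp), velocity: v.velocity, timestamp: t };
  }

  update(t: number, velocity: number): void {
    this.vector = { position: this.query(t).position, velocity, timestamp: t };
    for (const l of this.listeners) l(this.vector);
  }

  onChange(l: (v: Vector) => void): void {
    this.listeners.push(l);
  }
}

// A play button that knows nothing about video: it only updates the motion.
class PlayButton {
  label = "Play";
  private motion: LocalMotion;

  constructor(motion: LocalMotion) {
    this.motion = motion;
    motion.onChange((v) => {
      this.label = v.velocity === 0 ? "Play" : "Pause";
    });
  }

  click(t: number): void {
    const playing = this.motion.query(t).velocity !== 0;
    this.motion.update(t, playing ? 0 : 1);
  }
}

// A progress display that knows nothing about the button.
class ProgressLabel {
  private motion: LocalMotion;

  constructor(motion: LocalMotion) {
    this.motion = motion;
  }

  render(t: number): string {
    return `${this.motion.query(t).position.toFixed(1)} s`;
  }
}

const shared = new LocalMotion();
const button = new PlayButton(shared);
const progress = new ProgressLabel(shared);
button.click(5); // start playing at clock time 5
console.log(button.label, progress.render(8)); // Pause 3.0 s
```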

Above we saw how shared motion decouples UI components. Similarly, shared motion may be used to decouple content sources from each other and from presentation. Shared motion allows different content subsystems to operate independently, yet in a coordinated manner. Furthermore, content subsystems can use the motion to plan their operations in time. For example, content can be requested ahead of time, and appropriate policies can be developed for batch sizes and request frequencies.
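Planning requests ahead of time can be sketched as follows. Given the current vector and a lookahead window, the function computes which fixed-size content segments the motion will pass through, so they can be requested early. Segment size, lookahead and the name `segmentsToPrefetch` are illustrative assumptions. With constant acceleration, the extreme positions are reached at the ends of the window or where the velocity changes sign, which is why that turning point is checked.

```typescript
// Hypothetical sketch: using the motion to plan content requests in time.

interface Vector { position: number; velocity: number; acceleration: number; }

function positionAfter(v: Vector, dt: number): number {
  return v.position + v.velocity * dt + 0.5 * v.acceleration * dt * dt;
}

// Indices of the segments covered within `lookahead` seconds from now.
function segmentsToPrefetch(v: Vector, lookahead: number, segmentSize: number): number[] {
  const times = [0, lookahead];
  if (v.acceleration !== 0) {
    // Time at which velocity reaches zero, if it falls inside the window.
    const tTurn = -v.velocity / v.acceleration;
    if (tTurn > 0 && tTurn < lookahead) times.push(tTurn);
  }
  const positions = times.map((dt) => positionAfter(v, dt));
  const first = Math.floor(Math.min(...positions) / segmentSize);
  const last = Math.floor(Math.max(...positions) / segmentSize);
  const result: number[] = [];
  for (let i = first; i <= last; i++) result.push(i);
  return result;
}

// Usage: playing at 1x from 58 s, 10 s lookahead, 4 s segments.
console.log(segmentsToPrefetch({ position: 58, velocity: 1, acceleration: 0 }, 10, 4)); // [ 14, 15, 16, 17 ]
```

When the motion is paused, only the current segment is returned, so a paused presentation generates no prefetch traffic beyond what it is already showing.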

So, this decoupling allows content sources, motions and UI components to be combined and recombined in flexible ways. It is possible to switch dynamically between different motions, for instance between private and public motions. It is also possible to switch UI elements, which allows viewers to customize a running presentation while watching. Finally, it is possible to switch content sources dynamically. For instance, if the viewer has paused a live broadcast and resumes 5 minutes later, the live stream may dynamically be replaced by the on-demand version from the archive.
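Switching between a shared and a private motion can be sketched like this. When a viewer leaves the group, the hypothetical `Viewer` creates a private motion initialized from the shared motion's current state, so the presentation continues without a jump; rejoining simply rebinds to the shared motion. `SimpleMotion` is an in-memory stand-in, not a real shared-motion client.

```typescript
// Hypothetical sketch: switching a viewer between a shared and a private motion.

interface Vector { position: number; velocity: number; timestamp: number; }

class SimpleMotion {
  vector: Vector;

  constructor(v: Vector) {
    this.vector = v;
  }

  query(t: number): Vector {
    const v = this.vector;
    return { position: v.position + v.velocity * (t - v.timestamp), velocity: v.velocity, timestamp: t };
  }

  update(t: number, velocity: number): void {
    this.vector = { position: this.query(t).position, velocity, timestamp: t };
  }
}

class Viewer {
  private shared: SimpleMotion;
  private motion: SimpleMotion; // the motion the presentation is bound to

  constructor(shared: SimpleMotion) {
    this.shared = shared;
    this.motion = shared;
  }

  // Detach: continue privately from the group's current state.
  goPrivate(t: number): void {
    this.motion = new SimpleMotion(this.shared.query(t));
  }

  rejoin(): void {
    this.motion = this.shared;
  }

  pause(t: number): void {
    this.motion.update(t, 0);
  }

  position(t: number): number {
    return this.motion.query(t).position;
  }
}

const group = new SimpleMotion({ position: 0, velocity: 1, timestamp: 0 });
const viewer = new Viewer(group);
viewer.goPrivate(10); // detach at position 10
viewer.pause(10);     // pausing affects only the private motion
console.log(viewer.position(20), group.query(20).position); // 10 20
viewer.rejoin();
console.log(viewer.position(20)); // 20
```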

At this point, the value of conceptualizing motion as a principal entity on the Web is clear. It allows seamless interoperability between a wide variety of content sources and presentational components, as well as endless flexibility in how they are combined and recombined. The challenge then is to agree on an interface that matches the requirements of a wide range of very different media applications. In this respect, we find shared motion, as implemented by the Media State Vector (MSV), a very promising candidate for standardization. The MSV is expressive and easy to use. It is already appropriate for a wide range of applications, and with certain minor extensions its applicability can be increased even more. The simple and lightweight nature of the MSV is a basis for extreme scalability as well as accurate motion synchronization across the Internet. The simplicity also implies that motion sharing may be made available for any connected device, for any operating system, for any programming language, and for any web browser or media application.